It is Known with Joe Fitzsimons, part 2 of 2

[00:00:00] Sebastian: The New Quantum Era, a podcast by Sebastian Hassinger and

[00:00:07] Kevin Rowney: Kevin Rowney.

Hi, welcome back. This is part two of our conversation with Joe Fitzsimons of Horizon Quantum Computing, based in Singapore. If you're the kind of person who likes hearing the entire context, we would encourage you to listen to the prior episode, but if you just want to jump on in, please feel free to continue.

Without any further ado, here is the second part of our interview with Joe Fitzsimons.

[00:01:23] Sebastian: It's interesting to me that it feels like the evolutionary path of this turning into a technology is in that interplay between the theorists and the experimentalists, right? Yes. For sure. The two sort of, you know, main tribes of physicists, working around and through each other's breakthroughs to arrive at something that actually becomes useful.

It's a really, it's a fascinating process.

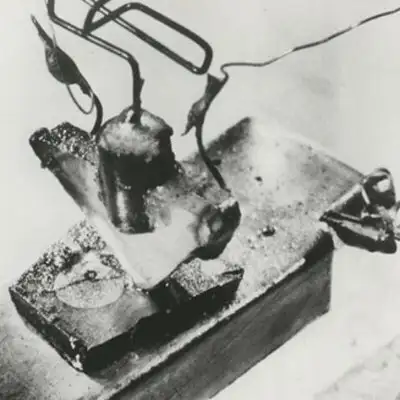

[00:01:46] Kevin Rowney: It really is. I mean, maybe my history, my, my knowledge of history of early technology development is flawed, but I have the impression that lots of the, you know, early explosive eras of, of invention, I mean electricity and silicon. Sure. Many of them were personalities just in that, in that mid-range.

I mean, both theorists and experimentalists, yeah. These entrepreneurs who somehow took bits and pieces of both cultures and ran with it. Yeah. Well, and,

[00:02:09] Sebastian: and I mean, to your point Joe, like you can only go so far with sort of the, you know, the, the elegant understanding of the theoretical. Um, foundations of a field, you have to then build it and see how well your predictions, um, turned out and then adjust.

Right? I mean, and then, and then the great thing is, as you're saying, there's all these strategies that emerge from the ground truth of the actual physical object that that gets produced, and, and the, the lessons you learn from operating it, it's really interesting.

[00:02:39] Joe F: Yeah. I mean, we see this from a different direction as well, at Horizon.

So, I mean, you know, my group was a theory group. We're a software company, and, you know, I guess software and theory are often seen as going kind of hand in hand. But the gap between a paper and an implementation in software is enormous. Yes. God. Yeah. So, I mean, there's plenty of things. Even within the company, you talk to people and they say, oh, it's known how to do this.

There's a big gap between "it is known" and anyone being able to actually do it. Yes. So, I mean, we see this all the time. An example of this is the HHL algorithm, right? This is an algorithm for estimating expectations of the inverse of a matrix. So you're trying to invert some matrix A and you want to compute something like x transpose A inverse x, or maybe the trace of A inverse, or something like this.

It turns out you can do this in polylogarithmic time, whereas classically you would expect it to take time that scales with about the cube of the size of the matrix. Mm-hmm. So there's an enormous quantum advantage to be had here. Mm-hmm. It turns out to be really widely applicable. There are lots of machine learning models where this is an important step.
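To make the classical baseline concrete, here is a minimal sketch (not from the conversation) that computes the quantity Joe mentions, x transpose A inverse x, by Gaussian elimination and counts the inner-loop arithmetic steps. The function names `solve_counting` and `quadratic_form_inverse` are hypothetical, and exact fractions are used only to keep the illustration free of rounding concerns.

```python
from fractions import Fraction


def solve_counting(A, b):
    """Solve A y = b by Gaussian elimination with partial pivoting.

    Returns (y, ops), where ops counts inner-loop multiply-subtract steps.
    """
    n = len(A)
    # Augmented matrix [A | b] in exact rational arithmetic.
    M = [[Fraction(v) for v in row] + [Fraction(bi)] for row, bi in zip(A, b)]
    ops = 0
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if M[pivot][col] == 0:
            raise ValueError("matrix is singular")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
                ops += 1
    # Back substitution on the upper-triangular system.
    y = [Fraction(0)] * n
    for r in range(n - 1, -1, -1):
        s = M[r][n]
        for c in range(r + 1, n):
            s -= M[r][c] * y[c]
            ops += 1
        y[r] = s / M[r][r]
    return y, ops


def quadratic_form_inverse(A, x):
    """Return (x^T A^{-1} x, ops) without forming A^{-1} explicitly."""
    y, ops = solve_counting(A, x)
    return sum(Fraction(xi) * yi for xi, yi in zip(x, y)), ops
```

For A = [[2, 0], [0, 4]] and x = [1, 1] this returns 3/4. Doubling the matrix size multiplies the step count by roughly eight, which is the cubic scaling referred to above.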

There's lots of other applications in engineering domains, and in all sorts of different domains where this is important. But it turns out the algorithm is relatively straightforward. You simulate the evolution of your matrix A as if it were a Hamiltonian, which is something we know how to do in principle.

And then you're going to do that in a kind of controlled way off some other register that's going to tell you how long to evolve it forward for. But that register's going to be in superposition. So, okay, we know how to add a control, more or less. So you're going to run that forward for some amount of time.

And then you're going to do a quantum Fourier transform, and then you're going to rotate an ancilla qubit by some angle that's going to depend on the arcsine of something that's spelled out in qubits, and all of that seems reasonable. You can explain all of this in a few lines of pseudocode. Each one of those lines is extremely difficult.

You know, okay, quantum Fourier transform: straightforward. I can write down that circuit. That's no problem. Hamiltonian simulation, so simulating e to the minus iAt for some value of t and some matrix A, that's difficult. That's to compute the

[00:05:42] Kevin Rowney: unitary transformation from the Hamiltonian. Yeah.

Okay. Right. Yeah. Yeah. So

[00:05:46] Joe F: there's, it is an active area of research, uh, how to, how to best approximate this if you have, yeah. And,

[00:05:51] Kevin Rowney: and in theory it's such a concise and powerful expression. But yes, I mean, in practice it's a handful. Yeah, so

[00:05:57] Joe F: we, I mean, we know roughly the complexity of doing it, but actually the best way of doing it is, you know, something that's

gone through many steps, you know, gone through quite a lot of evolution over the last number of years. But even the simplest thing, rotating a qubit by, you know, you want to rotate by one over a number that's spelled out. Sorry, excuse me. You have a number that's spelled out in qubits and you want to rotate.

Um, you want to rotate an ancilla qubit by an angle that's given by the arcsine of one over that number. That should be easy, right? On a classical computer, it's really easy to compute. Sounds easy in Python, right? Yeah, yeah, yeah. So, you know, what do you need to write down? You need to write down, you know, like acos of one over whatever the number is.
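As a rough illustration of why this "sounds easy in Python", the sketch below does the step classically: it reads a number k out of a bit register, computes the angle arcsin(1/k), and returns the amplitudes an ancilla qubit starting in |0> would have after a Y-rotation by twice that angle. The helper names are hypothetical. This is only the classical arithmetic; it says nothing about the hard part Joe is pointing at, which is performing the same computation coherently on a register in superposition.

```python
import math


def register_to_int(bits):
    """Interpret a list of bits (most significant first) as an unsigned integer."""
    value = 0
    for b in bits:
        value = (value << 1) | b
    return value


def ancilla_after_rotation(bits):
    """Amplitudes (on |0>, on |1>) of an ancilla rotated by arcsin(1/k).

    k is the integer spelled out by `bits`. Applying R_y(2*theta) to |0>
    gives cos(theta)|0> + sin(theta)|1>, so with theta = arcsin(1/k) the
    amplitude on |1> is exactly 1/k.
    """
    k = register_to_int(bits)
    if k < 1:
        raise ValueError("register must encode an integer >= 1")
    theta = math.asin(1.0 / k)
    return math.cos(theta), math.sin(theta)
```

For the register 100 (k = 4), the amplitude on |1> comes out as 1/4, which is the "one over the number" dependence described above.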

And that's it. And I'll put it to you: you know, what's the quantum circuit for that? Hmm. Like, that's, that's

[00:07:04] Kevin Rowney: really tricky. I haven't the faintest idea, by the way. Yeah.

[00:07:08] Joe F: I mean, how would you even approach doing it? Yeah, you can look it up. Some people have written research papers trying to explicitly write one for it, but, like, that's a tricky step.

Hmm. Now, it's known, right? Because we know you can compile C. We know that classical computing is equivalent to classical reversible computing, and we know you can write this in Python, so you must be able to write it as a circuit, so there

[00:07:38] Kevin Rowney: must be some way to get it done.

Yeah, yeah. Right.
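The equivalence between classical and classical reversible computing that this argument rests on can be seen in a very small case. The sketch below, an illustration rather than anything from Horizon's compiler, builds a half adder out of two reversible gates (a Toffoli and a CNOT) acting on three bits, and checks that the resulting map is a bijection. All function names are hypothetical.

```python
from itertools import product


def cnot(bits, control, target):
    """Flip bits[target] if bits[control] is 1."""
    out = list(bits)
    out[target] ^= out[control]
    return tuple(out)


def toffoli(bits, c1, c2, target):
    """Flip bits[target] if both control bits are 1."""
    out = list(bits)
    out[target] ^= out[c1] & out[c2]
    return tuple(out)


def half_adder(bits):
    """Map (a, b, z) to (a, a XOR b, z XOR (a AND b)).

    With the third bit initialised to 0 it ends up holding the carry,
    and the second bit holds the sum.
    """
    bits = toffoli(bits, 0, 1, 2)  # carry into bit 2, using the original a and b
    bits = cnot(bits, 0, 1)        # sum into bit 1
    return bits


def is_reversible(circuit, width):
    """True if the circuit maps the 2**width inputs to distinct outputs."""
    outputs = {circuit(s) for s in product((0, 1), repeat=width)}
    return len(outputs) == 2 ** width
```

Here `is_reversible(half_adder, 3)` holds, and `half_adder((1, 1, 0))` yields sum 0 and carry 1. Even this toy case needs an extra zero-initialised bit and a specific gate order, which hints at why the gate count for something like an arcsine is far from obvious.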

[00:07:40] Joe F: There is some way, but what is the way, and exactly how many gates does it need?

[00:07:46] Sebastian: Right. That's, and, and is it, is it a combination of, of the comp, like how big the circuit is and how complex? Or is it just the complexity of, of actually formulating the circuit? Oh,

[00:07:57] Joe F: so, so I mean, just, I mean, even just coming up with the circuit, it's something that is tricky.

Mm-hmm. So, like, one of the things we focused on, that we've been building into our system, is just the ability to compile from C. So you write some code in C and we can compile it to some gates, and that's okay, because you can compile C to compiler IR, and then there's only so many operations.

And if you know how to do each of these operations, well, clever people have already figured out how to compute sine, the math library in C, and so on, and you can start taking advantage of the existing libraries. Mm-hmm.

[00:08:38] Kevin Rowney: but it's, uh, well, it's fascinating. So that's one of the core technologies, right? Um, of your operation.

Yes. It's these, these compilers that have this Sure. Broad fluency for compiling down to these Yeah, yeah. Alternative architectures. Do I have that

[00:08:49] Joe F: right? Uh, yeah. But I mean, this is certainly part of our system. But, I mean, just in terms of the barriers, in terms of the things that, you know, are

seen as, you know, known how to do, there's a gap, right, between the theoretical result that yes, it's known this can be done in polynomial time, and actually doing it in polynomial time, you know, in some concrete number of steps. That's quite a big gap. Hmm. Um, and is it,

[00:09:18] Sebastian: is it your thinking that you would compile c to, uh, to quantum gates and, and then start to optimize?

You'd have something that runs, and then you'd start to optimize the implementation to try to get to sort of the, the, the, the best performing. Oh yeah.

[00:09:35] Joe F: So, what we do at the moment in the system: we showed off a first look at our IDE at Q2B last month. Oh, sorry. Actually we're in February now, so I guess in December.

Yeah. And, you know, what we showed in that was a BASIC-like quantum programming language, so it has the same kind of flow control as BASIC, along with the ability to include subroutines written in C or C++. So you can just import what we call a ctq file, which tells it how to interpret a function within a C file as a subroutine within your program, and what optimizations to make.

And when we run it through the compiler, it generates the full circuit and, you know, maps it down to whatever hardware we're targeting, but it takes care of that construction. Mm-hmm. So we've been trying to build the tools to bridge some of these gaps. Ultimately, where we're going is to try to automate the algorithm construction as well.

Mm-hmm. At the moment, we're not accelerating the C, so we're just constructing the reversible subroutine. We're optimizing it, but we're classically optimizing the gate set. Okay. We're not constructing a quantum algorithm for the same task now. Gotcha. There are some optimizations where it is kind of a quantum version.

But there's no polynomial speedup; we're only talking about constant-factor speedups at the moment. Hmm. So, you know, that side of things, that's just pure classical compilation, mm-hmm, with some kind of clever constructions of quantum gates and so on. But that's ultimately

what you're doing at that level. We're targeting above that. So we're targeting automatically accelerating the programs as well. And, you know, mm-hmm, the way to do this is essentially, if you look at any piece of computer code, for the most part you can compile it down to assembly, where basically you have a bunch of jump operations, so there's some flow control, and then you've essentially got blocks of assignments that are getting evaluated.

So you've got arithmetic expressions, for example; you have some circuit to be evaluated, and then some control flow graph that you're moving around that's telling you what's the next piece of the circuit. And it turns out you can accelerate both of these on a quantum computer. Mm-hmm. So it's known that circuits

can be evaluated faster, or at least certain types of circuits can be accelerated. And again, with the flow control, you can use Grover-like algorithms and things like this, mm-hmm, to also speed that up. Oh, interesting. Wow. When you start looking at it from this perspective, it starts looking like quantum computing is very broadly applicable.

Hmm. And, I mean, I think this is part of my outlook on quantum computing, why I'm so optimistic about it, because I really see it as being applicable basically everywhere. Wow. Now, there are some things we know we can't accelerate. We know that if you're trying to compute the parity of a string of bits, for example, it's hard to do better than the best classical algorithm,

and that's because the best classical algorithm is so good. But if you pick a really hard problem, for the most part, we tend to see some kind of quantum acceleration. Hmm. You know, that's interesting. If you take ones where we can't make them polynomial time, if you take some NP-hard problem, very often you see at least a square-root acceleration for a quantum computer.

[00:13:29] Sebastian: Yeah. So that's a very bold claim, Joe, because, I mean, that's certainly... It is. Yeah. You know, one of the themes of the hand-wringing is about, you know, should we be investing so much time in quantum, is it ever going to turn out to be as good as we think it is?

People tend to point to, like, the Quantum Algorithm Zoo and say there's only, you know, whatever, a dozen or fewer really solid algorithms there that are proven to be advantageous. But you think it's much more broadly applicable? There are

[00:14:02] Joe F: hundreds of algorithms on the Quantum Algorithm Zoo.

Okay. What I would say is that there are only a small number of tricks we know of. Yes. Okay. Right. So, I mean, I guess you can categorize different quantum algorithms by what tricks they're using. Mm-hmm. And so there's, you know, Fourier-transform-based tricks like period finding and different things like this.

There's amplitude amplification, and that gives you kind of Grover search and also gives you quantum walks and things like this. Mm-hmm. There's kind of adiabatic stuff, and, you know, there's various different categories, mm-hmm, that you can come up with, and

[00:14:45] Kevin Rowney: there's not, I gather topological algorithms as well.

Yeah. That there is some exactly. Significant faces in that direction. Yeah. There's

[00:14:52] Joe F: not all that many different techniques at the moment. Okay. But each time we discover a new technique, we get lots out of it. Got it. Um, so

[00:15:00] Sebastian: they're not, they're not recognizing how broadly applicable those basic techniques are that Yeah.

Got.

[00:15:06] Joe F: Yeah, so I mean, if you're in a situation, like if I gave you a magic technique that said, every time you write a for or a while loop, it's going to take only the square root of the number of evaluations you'd require classically, then it doesn't matter that that's only one thing. Right. It's just applicable to everything.
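The square-root behaviour is easiest to see in the textbook case of Grover search over N items with a single marked item. The sketch below is a small classical state-vector simulation of that case, using the standard iteration count of about (pi/4) times the square root of N. It is not a model of the automatic loop acceleration Joe describes, and the function names are hypothetical.

```python
import math


def grover_iterations(n_items):
    """Standard iteration count for one marked item: floor(pi/4 * sqrt(N))."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


def grover_success_probability(n_items, marked, iterations):
    """Simulate Grover search and return the probability of measuring `marked`."""
    # Start in the uniform superposition over all items.
    amp = [1 / math.sqrt(n_items)] * n_items
    for _ in range(iterations):
        # Oracle: flip the sign of the marked item's amplitude.
        amp[marked] = -amp[marked]
        # Diffusion: reflect every amplitude about the mean amplitude.
        mean = sum(amp) / n_items
        amp = [2 * mean - a for a in amp]
    return amp[marked] ** 2
```

With N = 64, `grover_iterations(64)` gives 6 oracle calls, after which the marked item is found with probability above 0.99, whereas a classical scan of an unstructured list needs about N/2 = 32 lookups on average.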

Yes. Right. And

[00:15:28] Kevin Rowney: that's really interesting, Joe. You're almost positing a possible future where, you know, as an average, everyday, workaday coder, you could just, while you're compiling your code for a for loop, invoke quantum, and it would just automatically, you know, accelerate rapidly, right on cue.

Yeah, exactly right. Yeah.

[00:15:48] Joe F: So that's what we've been finding at Horizon. You know, it's a long path to build a system that can do this. Yes. So we've had to build up the ability to compile, you know, pieces of classical algorithms, pieces like classical subroutines, and to be able to come up with our own quantum programming language that can incorporate these but that is Turing complete.

So we're not just compiling circuits. Because one of the things you immediately run into is: what do we do about while loops? Mm. Because, you know, everything you run on a quantum computer today is essentially a circuit, mm-hmm, but a while loop you can't write as a circuit, for the most part. Mm-hmm. So, you know, what are you going to do about that?

Um, you just can't encode a Turing machine as a circuit. Um, so we've been building up

[00:16:39] Sebastian: those tools. We need some sort of control structures is what

[00:16:41] Joe F: you're saying? Yeah. Yeah, yeah, yeah. So we've been building up those tools. We've built up already some demos where we automatically refactor a subset of MATLAB code and construct quantum algorithms from that.

So pulling apart loops into simpler and simpler elements, recognizing what each of these simple elements does, and replacing it with a more efficient way of doing the same thing. It does not accelerate everything. There are certain kinds of elements we don't know how to accelerate, but there's lots we do know how to accelerate.

Mm-hmm. . Um, and that, that might be,

[00:17:13] Sebastian: I mean, I wonder if that's another theme in your sort of reasoned optimism, that I don't know that people are grasping. This goes back to what I was saying about sort of shooting for that, you know, Shor machine, let's call it. People don't really recognize how much we can get out of these early stages if we're implementing classical and quantum in a hybrid kind of architecture,

[00:17:39] Joe F: right?

So I want to be a bit careful here, to not mislead people in terms of my own beliefs. There is quite a big effort to gain an advantage with near-term systems that have higher error rates, or have moderately high error rates, or at least where errors are still present. Mm-hmm. Um, and

[00:18:06] Kevin Rowney: What is called the NISQ era. Yeah.

The

[00:18:09] Joe F: NISQ era. Yeah. Yeah. Yeah. And personally, I'm skeptical that we will see many NISQ applications. I would absolutely believe that it's possible to see one for chemistry. Mm-hmm. I don't believe it about general optimization. I think that's, I, if,

[00:18:26] Kevin Rowney: if I could just chime in on that very point, because, I mean, we've been of course watching this NISQ phase and heard many people speculate about plausible, you know, ab initio quantum algorithms translated onto NISQ-era hardware with even a small number of qubits

making big breakthroughs. But just in the last year or so, I mean, there have been gigantic and radical evolutions, right, in molecular geometry algorithms for classical computing, where, you know, many chemists have just abandoned ab initio techniques for classical computing, and they're just using, you know, ML techniques, with huge breakthroughs.

I mean, it seems like there's a possible conclusion in the minds of some that, you know, the threshold for quantum advantage in chemistry is receding. Would you agree?

[00:19:13] Joe F: Yeah. Yeah. So I'm not a chemist, so I'm not really well placed to talk about which molecules are easy to simulate and which are hard.

My understanding is that certain classes are relatively straightforward to model on classical computers and you can get good results from them, but, you know, there are different ways it can go. When certain elements are included, that can make it difficult. But also, the other side of this is things like large flexible molecules and things like this.

Mm-hmm. And how the modeling of that goes. So, you know, whether ML techniques there lead to accurate predictions or just plausible-looking predictions? Yeah, right. I don't know which way things go there.

[00:20:06] Sebastian: The ultimate fake-it-until-you-make-it technology. Exactly. ML is just like, I mean, it looks right.

Yeah.

[00:20:11] Joe F: So, I mean, I don't know. So this is something that was done, I think, quite a lot in the film industry with, um... So when you're, like, rendering a sea, an ocean or something like this, actually simulating the computational fluid dynamics for that is really difficult.

But you don't need to; you just need to make something that looks oceany, right? Same thing with fire, right? Like, you want to simulate fire, that's a really complex simulation, but you don't actually need the full simulation to fool a person, because a person can't tell. Right,

[00:20:52] Kevin Rowney: right. Just to make a good movie.

But if you want any real physics or engineering, it's, it's entirely different

[00:20:57] Joe F: question. Yeah. So, um, yeah, so I, I mean I, I found that kind of interesting when I saw that there was some like cut down version of fluid mechanics that was being used in some movies to give like very realistic looking, right.

Uh, scenes. But it's obviously like this bit of foam here actually would not be here in reality. Right. Mm-hmm. Yeah. So, I mean, for chemistry, I just don't know where the lines lie. I'm not a chemist. Right. So I don't work on chemistry algorithms either. But it seems very plausible to me that that is an area where we would see an advantage, because the systems are just so analogous.

Right. I mean, you're using a quantum mechanical system to simulate another quantum mechanical system. That quantum mechanical system that you are trying to simulate, out there in the real world, is subject to noise as well. It's interacting with its environment. Mm-hmm. Exactly. So you would expect to be able to make predictions

where, even if the noise is present, if you can shape it in the right way, right, so that it looks like the environmental noise that, oh, interesting, that particular, yeah, atom will be seeing, or that particular molecule will be seeing, then you would still expect to have a good advantage and get great predictions out of it.

So that seems plausible to me, because nature has noise in it. So as long as you can just shape the noise so that they match, then it seems plausible to me that things work out. Um, but

[00:22:26] Sebastian: for the broader, the more broad, uh, sets of, of advantages that you were describing, you're, you're thinking fault tolerance is, is sort of the, the, the base requirement

[00:22:36] Joe F: or low noise.

So let me say low-noise regime rather than fault tolerance. Okay. But, yeah, where your likelihood of having errors is relatively low, or the number of errors you're having is relatively low. Mm-hmm. Mm-hmm.

[00:22:51] Sebastian: I guess at that point you can, you can actually design around that with sort of the logical construction of your programming.

Like you can, if, if the errors are fairly rare, then you can have safeguards in your code for the error.

[00:23:02] Joe F: Yeah, yeah, yeah, exactly. Interesting. Yeah. So you don't necessarily need to do everything fault-tolerantly, mm-hmm, if you know that your errors are rare. You know, there's some nice results as well on, like, what happens if you only have to protect against memory errors rather than processing errors.

Mm-hmm. Different kinds of things like this.

[00:23:27] Sebastian: Well, first you need memory, as you pointed

[00:23:29] Joe F: out. Sure. But the memory could just be qubits that you're not dealing with at the moment. Yeah. So, yeah, I mean, just in general, I think it's the case that in the NISQ regime we have limited evidence that there's a speedup

from the existing algorithms for real-world problems. And when you're in that regime and you're hoping that quantum computing will offer an advantage, I think there's a danger of the power of wishful thinking taking over. Mm-hmm. Mm-hmm. And that you convince yourself of applications that are not going to,

that are not going to materialize. So, no, I'm not a big believer in the power of wishful thinking, even though I'm extremely optimistic about the future of, right.

[00:24:19] Sebastian: And that, I think, was exactly what you conveyed in your Twitter thread, just to come back full circle. I mean, you know, if

if we go back to where you were saying, you know, the co-design, essentially the interplay between our theoretical models and then, you know, running on real hardware, is what's going to lead to, at least, the precipice of a breakthrough that you can then apply industrial-scale capital to. It feels like that's really the lens that the NISQ era needs to be seen through: this is a necessary

Collaborative, uh, project to get to the point where we can actually launch a viable technology that makes a big difference to a large number of

[00:25:08] Joe F: people. Yeah, I mean, like, you know, no one is going to build a quantum computer in their shed and have it take over, you know, all of the microphones.

[00:25:20] Sebastian: I dunno, I've seen some movies from Marvel that would suggest

[00:25:22] Joe F: otherwise. Yeah. So, I mean, I'm not saying that it's not possible that at some point someone will build a quantum computer in their shed. But what I am saying is, you know, with the current state of the technology, if you want to be at the forefront of what's possible, it's really hard and quite expensive.

But, uh, yeah,

[00:25:46] Kevin Rowney: a big team, A lot of capital no other way at this point. Yeah. And

[00:25:49] Joe F: we need to go through these steps. There's no fast-forwarding; we can't just jump to a trillion qubits or something like this. Right, right. But, you know, maybe the line is different. So for

conventional computing, it had to beat people, right? Like, that was, right, the benchmark. Maybe people with mechanical calculators or something like this, right? Like, that's what you need to beat, and log tables, right? If you can beat that, then you're, you know, you're useful. So, you know, you see it for sorting data first of all, I guess. Like, if you go to the Computer History Museum, you see all these punch card machines, mm-hmm,

that were used in the census and stuff like this. Yeah. But, you know, by the time you get into the Second World War, you start seeing, you know, the first computers being built to, well, to start breaking codes, essentially. Mm-hmm. And then other uses. Yeah. And simulate physics.

Yeah, exactly. Yes. It's like, it's exactly the same. Like I know. If you wanted a reason to be optimistic. Yeah. Like what are the very first things that conventional computers are used for, right. Like breaking codes and simulating physics. Yeah. You know, history

[00:27:06] Sebastian: doesn't, history doesn't repeat itself, but it does rhyme.

[00:27:09] Joe F: Yes. . Yeah. Yeah. So I, I've got some slides that I show on the timeline of quantum algorithms and classical algorithms and like, you see them, like you see, uh, all the sorting algorithms as well early on and things like this. Like, well, Grover search is quite like that. Yeah. And you see, um, uh, you see like hashing and you've got quantum fingerprinting and you see, um, then you start seeing linear algebra.

And you see, well, oh yeah, we've got all of these, you know, HHL methods for fast linear algebra and stuff like that. Mm-hmm. Interesting parallels. Yeah. Yeah, yeah. And even machine learning. You think of machine learning as a very modern occurrence in computing. But the McCulloch-Pitts paper on the artificial neuron is from 1943. Mm.

Whoa. Yeah. Amazing. So, like, it's right at the start. So amazing. Even this QML trend that you see also has echoes in history. And, you know, when that's starting, the amount of memory of any of these systems is small. Tiny, yeah. Right? Yeah. It's more than the number of qubits we've had in current systems, but now we're getting, you know, the latest chip, I guess, is 433 qubits, something like this. That's right.

We're expecting to see thousands in the next couple of years. That's right. Like, if you go to the Computer History Museum, you can see, like, a kilobyte of RAM. That's right. You know, you can see the block, you can see the ferrite cores.

[00:28:40] Sebastian: Hand-wound cores. Yeah.

[00:28:42] Joe F: Exactly. Um, so we're getting to a point where the quantum computers are comparable.

You know, there's maybe a notion that classical computers, you know, didn't have errors, but they did. Mm-hmm. You know, okay, maybe the errors were different in nature and so on. And certainly our thresholds for fault tolerance and error correction are different. But you're still getting into this regime where the devices are starting to look like computers.

Mm-hmm. And actually not even very old computers; they start to look maybe not that dissimilar from the kind of processors that were, you know, the first Intel processors and stuff like this. Mm-hmm. And that's not 1940 or something. Right. That's significantly later. Yeah. And, you know, okay, they're noisy still.

If we can get down the noise, and maybe we do need to go to fault tolerance to squeeze it all out, or maybe we're able to do some other tricks in terms of decoupling and other kinds of forms of protecting qubits that reduce the noise low enough for us to be able to do interesting things. Or maybe we see things like the kind of bosonic encodings

that have come up in superconducting systems in recent years. Right. And we start to see more progress with error correction using less physical hardware, mm-hmm, yes, than is otherwise required. So you don't need a thousand physical qubits. You maybe need lots of levels, but it's still the one, you know, the transmon and the, you know, cavity mode and so on.

Right. Then, you know, then maybe you're in a situation where things start to look pretty interesting. But the thing is, we're not competing against people. We're competing against computers. Computers, yeah. Computers do get better, and they get better quite quickly. So maybe where the crossover point is, it's not the same as it was for conventional computing.

Right. So maybe it's not at the scale of, you know, like an ENIAC or UNIVAC or something like this. Maybe it's further beyond that. Maybe you need a more developed system before you get there, right? But one thing we know is that it's really, really hard to simulate these systems.

Yeah. Okay. We don't have an absolute proof of that, but we're about as convinced of that as anything. Right. So, you know, if you can give me a system with 10,000 perfect qubits, there is no computer on Earth, if you put all of the computers on Earth together, nothing can come close to simulating it.
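One way to get a feel for the scale here is to count the memory a brute-force state-vector simulation would need: a general n-qubit state has 2 to the power n complex amplitudes. The sketch below assumes 16 bytes per amplitude (two 64-bit floats), which is an illustrative choice, and `statevector_bytes` is a hypothetical helper. Storing the full state vector is only one simulation strategy, so this is a rough intuition rather than a hardness proof, consistent with Joe's caveat that no absolute proof exists.

```python
def statevector_bytes(n_qubits, bytes_per_amplitude=16):
    """Bytes needed to store all 2**n_qubits complex amplitudes."""
    return (2 ** n_qubits) * bytes_per_amplitude


def describe(n_qubits):
    """Return a short human-readable summary of the memory requirement."""
    total = statevector_bytes(n_qubits)
    digits = len(str(total))
    return f"{n_qubits} qubits: a byte count with {digits} decimal digits"
```

Under these assumptions 30 qubits already need 16 GiB, each additional qubit doubles the requirement, and for 10,000 qubits the byte count is a number with more than 3,000 decimal digits.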

That's right. Um, so are there uses for it for sure,

[00:31:38] Sebastian: right? ? If

[00:31:39] Joe F: you try it, there has to be. Yeah. If you try to use it as an annealer, will it solve an optimization problem faster? Like, probably not. You probably need to put more thought into your algorithms than that. Right. But for sure there will be interesting things it can do.

Interesting. Yeah. So, you know, when it comes to the situation on NISQ, you're right. It's something we have to get through. If there were commercial use cases at this scale, fantastic. It would be a big boon for the industry, and there'd be money coming in, mm-hmm, and that would drive things faster.

But we have to recognize that there may not be, right, that we may need to get to bigger systems. But we know that for bigger systems, there's definitely things we can do. Yeah. Uh, and I would argue that

[00:32:28] Kevin Rowney: that's a very interesting perspective, Joe, because, I mean, you know, so many people have been focusing so powerfully on the NISQ era, trying to raise people's optimism there.

But you're really pointing towards a brighter future, farther out. Yeah. But, you know, yeah. Right. Where there'd be a certain threshold of quantum error correction and qubit volume and error control that would, you know, really just open up a huge range of applications, and your historical background, mm-hmm,

that justifies the, it feels like it's a, . So now I want to politely corner you and ask you your, your, your point of view. I mean, what do you, would you be willing to speculate on the timeframe at which, you know, you, we really see a, a, a breakthrough, uh, you know, real live demonstration of commercially relevant, you know, uh, quantum superiority, quantum supremacy, um,

[00:33:16] Joe F: you

[00:33:16] Kevin Rowney: know, so I know that's a controversial term and please forgive me for being so I dunno forward with this one.

I just, I had

[00:33:22] Joe F: to ask. Sure. So, uh, . What I would say is, um, in sometimes, fortunately I don't have to, because I mentioned a report earlier, uh, in the podcast when, um, about the timeline to breaking our, say 2048. Yes. And that does give timeframes, so it asks people for their confidence level and different timeframes.

And it turns out that the majority of people, the majority of respondents switch from thinking it's less likely than not to, more likely than not at the 10 to 15 year time. . Mm-hmm. . So that's for breaking cryptography. I would say that you would expect to see applications before that. Mm-hmm. . So you would expect to see, uh,

So let's say applications outside of chemistry, I guess I would expect to see two to three years before you can break crypto about it, right? Mm-hmm. , um, chemistry. So like, so

[00:34:16] Kevin Rowney: Chemistry should be an HHL thing, for instance. I mean, do you think HHL would be a prime candidate?

[00:34:21] Joe F: Sorry, can you repeat that?

[00:34:23] Kevin Rowney: Yeah. Do you think the HHL algorithm would be a primary commercial supremacy candidate for, uh,

[00:34:29] Joe F: Um, so at the moment, the bottleneck in that, I would say, is Hamiltonian simulation. Uh, if we can get around that, uh, then the algorithm itself is very compact, so you can do it on small numbers of qubits.

So yeah, I would see, if we can get a bit better at how we do it, maybe if we eliminate doing matrix exponentials. So it wouldn't be HHL necessarily, but faster linear algebra. Yeah. I can see that as being an early use case: accelerating linear algebra.

[00:35:00] Kevin Rowney: Mm-hmm. Uh-huh. And would you be willing to, again, speculate on a timeframe for when that eventuality might...

[00:35:06] Joe F: That's pretty robust.

I mean, that's, that's not robust to noise. So, um, yeah, I mean, you're looking at fault-tolerant systems for that, right? Yeah. Uh, I would say you need probably, you know, kind of a hundred fault-tolerant qubits, something like that. So similar time to the fault-tolerant chemistry advantages, mm-hmm, I would say.

Mm-hmm. Uh-huh. Yeah. Yeah. Um,

[00:35:27] Kevin Rowney: So, but before RSA, you think? Before?

[00:35:31] Sebastian: Oh yeah, of course.

[00:35:32] Joe F: Yeah, yeah, yeah, yeah, yeah. So, I mean, I would say you can probably start seeing very interesting things on a system that is maybe about a 60th of the size of the one you would need to break RSA 2048.
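One way to read the "60th of the size" figure is in terms of doublings, the way Moore's-law-style estimates are usually framed. The sketch below is only arithmetic on that ratio. The function name `doublings_between` and the doubling periods in the loop are hypothetical inputs chosen for illustration; nobody in the conversation commits to a doubling rate.

```python
import math


def doublings_between(small, large):
    """Number of size doublings needed to grow from `small` to `large`."""
    return math.log2(large / small)


ratio = 60  # "about a 60th of the size", as stated in the conversation
n = doublings_between(1, ratio)
print(f"{n:.2f} doublings")  # just under six

# Hypothetical doubling periods in years: illustrative inputs, not forecasts.
for period_years in (1.0, 1.5, 2.0):
    print(period_years, "year doubling ->", round(n * period_years, 1), "years")
```

The same ratio appears if the roughly 100 fault-tolerant qubits mentioned for early applications are compared with the 6,000 mentioned for RSA 2048: `doublings_between(100, 6000)` gives the same value.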

[00:35:48] Kevin Rowney: And so in comparison to this, uh, this global risk, uh, analysis, the Global Risk Institute report, that I think puts it, what you said, around 10 to 15 years. 14, yeah.

[00:35:56] Joe F: So, I mean, for a prediction, you can say what's Moore's law and work backwards from that. So like, how many doublings are in between?

[00:36:03] Sebastian: Um, well, and I guess to your point about the, um, the power of industrial-scale investment and capital mobilization, if we get a realization of fault-tolerant qubits, even in small numbers, that would be the thing that would motivate that level of capital investment.

So from one fault-tolerant qubit to 6,000 fault-tolerant qubits is probably a lot faster than, um, than the, uh, the road to that point.

[00:36:29] Joe F: It's hard to know. Um, yeah. You'll run into issues. It'll depend on the technology. Um, you'll run into issues with just the physical size of the qubits. Mm-hmm. Um, so it may require miniaturization, right, right,

to take hold as well, to, like, get down. Right. Um, you know, at the moment, physical qubits for superconducting systems are fairly large. Yes. Um, so, you know, I don't know, like if you need to make 6,000 copies of the thing and have it in a giant facility, right, um, it might just be prohibitively expensive.

That's right.

[00:37:10] Sebastian: That's right.

[00:37:10] Joe F: Well, probably not actually. I mean, I guess it costs a couple of million for an individual setup, so, you know, you can multiply that up. You're still well below a, yeah, well below a two mil, uh, sorry, a two nanometer fab. Right, right,

[00:37:27] Sebastian: Right, right. Yeah. Well, this has been an amazing discussion.

Really interesting. Yeah. Thank you. Really nice. This is exactly what I was hoping it would be, Joe. Thank you. This is an expanded Twitter thread into an hour of discussion, which is exactly what I wanted. Um, anything that you'd like to sort of end on? Any last sort of observations or call to action or admonishment to just chill out and relax and, oh,

[00:37:54] Joe F: So, so now that I've, yeah.

Now that I've publicly alienated all my former colleagues and also, uh, the people going after the tech industry. Um, yeah. Yeah. Um, look, uh,

[00:38:09] Sebastian: Any bridges you'd like to repair?

[00:38:14] Joe F: Yeah. So what I would say, uh, you know, what I didn't talk about so much here is, you know, from the industry side, it is really difficult to build quantum computers, right?

So I really do have an appreciation for how hard, uh, the work of the experimentalists is in this. Um, and I don't mean to minimize that at all. Um, but what I would say is that to get to this future, there are different things we need to be working on. We're not currently working on them all. Um, and it serves us to be optimistic about the future if we want to get there quicker.

Mm-hmm. So you can look at the future, if you believe this is what the future looks like. If you take this optimistic view of, uh, the disruptive power of quantum computing, then it tells you there's certain things you should be looking at now that people are not looking at. No one works on data structures, which is insane.

Yeah. Wow. And there just aren't data structure papers for quantum computing. There's like a handful. Um, it's crazy. It is a massive part of algorithms. So when we talk about,

[00:39:26] Kevin Rowney: There's also numerous prominent scholars who are saying there's huge amounts of room for algorithmic development as well.

[00:39:32] Joe F: We don't look at the systems as anything other than monolithic chips for the most part. Mm-hmm. Or, like, trying to network together our monolithic chips into a, mm-hmm, a larger processor. Like, we do need to start thinking about, you know, how the evolution of this goes, how we make components that can work together.

Mm-hmm. Um, I mean, there's just a lot of scope in terms of things that are not being focused on at the moment that would clearly be needed if you are going towards a future where quantum computers start to look more like conventional computers. Mm-hmm. I don't mean necessarily my MacBook or something like that.

I mean just even large supercomputers or whatever. Like, even if you're constraining it to that point of view, you still need them to be able to talk to the outside world. There's still lots of different things you need, mm-hmm, on that path. Um, and yeah, I think I would stress that, you know, this future, if we're gonna get there, it's going to be built by people, by optimists, right?

Mm-hmm. It's not going to be built by people who accidentally discover it's possible, right? Just 'cause it takes such sustained effort over such a long time.

[00:40:58] Sebastian: Yeah. And I think your point, the point of having to think more deeply about that optimistic, uh, horizon of quantum computing, I'm starting to think maybe that's where the name of your company came from.

Uh, yeah. It requires optimism and it requires sustained effort over a long period of time. And, yeah, what we see in the public discourse is often just too short term. It's either short term, overly optimistic, or short term, overly pessimistic. Yeah. And really the ingredient that we need to try to eliminate is that short-termism.

[00:41:37] Joe F: Yeah. Yeah, yeah, exactly. And like this is, you know, one of the reasons why, for me at least, taking the startup path was the right route. Um, because it gives you clarity of purpose. Hmm. So when you're in academia, there's a lot of different things that are competing for your attention.

Mm-hmm. Lots of different projects you can be working on, and it's quite common to jump from one project to another. Certainly I did that a lot. Mm-hmm. Worked on lots of different things. But if we want to get to a point where we develop something into a real technology, then it requires focus and sustained effort to develop it.

Not to the point of just showing something's possible, but to actually developing it into a real working system. And that can take many, many years of effort. Yes. Um, you know, is that possible in larger companies? Sure, of course it is. But none of those companies are quantum computing companies.

None of those companies have quantum computing as its core mission. Well, like, so they can let go of it. Hmm. They can decide that actually, you know, maybe we're gonna deprioritize that. For us, that is what we're doing. Right. And if it does not work, then the company doesn't.

[00:42:59] Sebastian: That's such a great articulation of why startups are so critical to any industry, but to tech in particular, because of that make or break, right?

It's that level of investment in trying to get the technology over the hump into a product that people will actually pay for. And if you don't, that's the end of the startup. It's incredibly motivating focus. Yeah. Yeah. Well, thank you so much, Joe. We really appreciate this. We appreciate your time and your perspective.

[00:43:30] Kevin Rowney: Really, really great, Joe. Thank you so much.

[00:43:32] Joe F: Sure, no problem. Thanks very much for,

[00:44:11] Kevin Rowney: Hey, uh, welcome back again. Another fascinating interview. Sebastian, one of the things that really stood out for me from this dialogue, kind of a surprise, uh, was to hear Joe's perspective on essentially dialing down the level of enthusiasm we should have about the NISQ era quantum computers.

And he has a longer term outlook, which is quite positive. Mm-hmm. But he's skeptical about this NISQ era. I mean, I think just for the benefit of our audience, we should be filling in our acronyms here to be more clear. Some people know this already, but NISQ stands for N-I-S-Q: noisy, um, intermediate-scale quantum computers.

So that's, I think, a fair description of many of the commercially available machines right now. All of them. Yeah. Yeah. Right. Exactly. Like, high error rates and, you know, low coherence times, uh, and, you know, not very many qubits. Right. But, you know, many people have been, I think for a long time, uh, placing serious, uh, reputation bets, right, on thinking that there could be a quantum supremacy breakthrough

in that category sometime within reach in the foreseeable future. Right. Joe is pretty negative on that and, somehow... yeah. Right. Yeah, yeah,

[00:45:18] Sebastian: Yeah. And the phrase was coined by John Preskill, who is a professor at Caltech and quite well known in the field. Um, and it was, oh, yeah, he's definitely a name.

Um, and yeah, he was thinking about, you know, up to a scale of a couple hundred qubits, but mostly not noise corrected or not noise mitigated. So, you know, the constraints are with, uh, the length of operation and the depth of the circuit you can run.

Um, and yeah, Joe was, you know, there are a lot of people who were trying to use those NISQ machines in conjunction with, uh, classical, in, yes, um, variational-type, uh, settings: variational quantum eigensolver, uh, QAOA and others. Um, and

[00:46:06] Kevin Rowney: Possibly some ML applications as well. Yeah.

[00:46:08] Sebastian: And there might, and I mean, I still remain, um, guardedly optimistic, but I think that where I definitely agree with Joe is that, um, this NISQ era is necessary, right?

We need, sure. And he talked about co-design, and I think that's a really powerful, uh, tool for advancing the technology, uh, where, you know, the experimentalists build the best machine they can. The theorists get to test out that machine and, frankly, the technologists, the computer scientists who are new to this whole endeavor,

get to also get their hands on real hardware and apply what they know about, uh, software engineering, complexity theory, yes, all of the other disciplines. It's really one of the things that I find the most fascinating about the field, that interplay across domains in this co-design phase, as we explore all the challenges to actually getting to that early fault-resistant stage.

I thought that was cool too. He called out fault resistant versus fault tolerant. So he thinks, you know, as the gate fidelities, as the noise gets better controlled and diminishes, the, uh, threshold for usable, uh, computational power drops as well. So he's kind of thinking, you know, noise resistant, not fully noise tolerant or fault tolerant.

Uh, which I think, that was certainly a cause for optimism. And I thought that was really an interesting way to sort of think of the evolution, right? So you've got the NISQ era, which is sort of a necessary experimental stage. You've got early noise, uh, resistant, maybe in the 100 to 200 qubit range, where he thinks there'll be sort of niche scientific applications that come out of that.

And then, um, you know, when you get up to large numbers of, hundreds, maybe a thousand, uh, noise-resistant, um, qubits, you get to the topic that you are really excited to get to, which is compiler design.

[00:48:08] Kevin Rowney: Absolutely. Absolutely. I mean, it's just amazing that his startup and, uh, his team are working on, like, two incredibly hard and very interesting, uh, domains.

I mean, one is of course quantum computing and its realms; the other is compiler design, which is never for the faint of heart, right. Right. But the intersection, like, you know, advanced QC algorithms together with compiler optimization, you know, in this, uh, the follow-on era after NISQ, I mean, he's asserting that there is a gigantic possibility, right?

Yeah. In about 10 years or so, of there being general-purpose, right, optimization techniques for a compiler. Isn't that amazing? To compile basically optimizations of plain old everyday loops down into Grover's algorithm and similar. Yeah. Yeah. It's like, wow. I really had no idea.

[00:48:53] Sebastian: That, and I've, you know, I'm guilty of actually, like, going to his website and seeing talks by his team and stuff at conferences, and I still hadn't fully taken that on board until he talked us through it.

When he said, you know, uh, whatever, yes, it was speeding up a while loop, like, you know, I was under the impression that there were these sort of big bang, kind of, you know, Shor's and Grover's and things that were, um, you know, monolithic in his terms. He actually was also talking about, uh, system design and how everything that we're building now is monolithic, and he's thinking in a much more modular, mm-hmm,

and, uh, sophisticated system design kind of way. Where, you know, I made the joke during the conversation, you can sort of throw a minus Q on your compiler flags and get the benefit of QCs in the cloud. Yes. Doing, you know, optimizing your while loops. Like,

[00:49:45] Kevin Rowney: That's just incredible. Almost like an Nvidia card or something, right?
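To make the "everyday loop compiled down to Grover's algorithm" idea concrete, here is a small sketch that compares a plain while-loop search with a classical simulation of textbook Grover search for a single marked item. It is not Horizon Quantum Computing's compiler or method, which the conversation does not describe in detail; the function names, the 1,024-item search space, and the marked index are all hypothetical choices for illustration. The simulation runs on an ordinary computer and only demonstrates the iteration counts.

```python
import math


def classical_search(n_items, marked):
    """Plain while loop: check items one by one, counting the checks."""
    checks = 0
    i = 0
    while True:
        checks += 1
        if i == marked:
            return i, checks
        i += 1


def grover_search(n_items, marked):
    """Simulate textbook Grover search with exactly one marked item."""
    # Start in the uniform superposition over all items.
    amp = [1 / math.sqrt(n_items)] * n_items
    iterations = math.floor(math.pi / 4 * math.sqrt(n_items))
    for _ in range(iterations):
        amp[marked] = -amp[marked]             # oracle: flip the marked sign
        mean = sum(amp) / n_items
        amp = [2 * mean - a for a in amp]      # diffusion: invert about the mean
    probs = [a * a for a in amp]
    best = max(range(n_items), key=probs.__getitem__)
    return best, iterations, probs[marked]


found_c, checks = classical_search(1024, 700)
found_q, iterations, p_success = grover_search(1024, 700)
print("classical checks:", checks)
print("Grover iterations:", iterations, "success probability:", round(p_success, 4))
```

The loop needs a number of checks proportional to the size of the search space, while the Grover iteration count grows with its square root, which is the kind of speedup a compiler pass of this sort would be aiming for.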

[00:49:48] Sebastian: Yeah, exactly. Exactly. Yeah. Yeah. So that was really, really exciting, uh, to hear. And I hope our listeners enjoyed it. And I also wanted to, uh, add a note, um, for all our new listeners: you may have noticed a little bit of a spotty release schedule over the last, uh, couple months. We are still endeavoring for biweekly releases, so we're trying to do two episodes a month, and we're back on track.

We had some logistics issues, uh, with some of the work that goes into actually bringing this podcast to you. But we've cleared those up and we've got a good pipeline of guests coming up. We're really excited about the shows that are happening in the,

[00:50:27] Kevin Rowney: Such is podcast life: sometimes interruptions, but we endeavor to do better.

Yes, that's right.

[00:50:33] Sebastian: Always.

[00:50:35] Kevin Rowney: Okay. That's it for this episode of The New Quantum Era, a podcast by Sebastian Hassinger and Kevin Rowney. Our cool theme music was composed and played by Omar Costa Hamido. Production work is done by our wonderful team over at Podfly. If you are at all like us and enjoy this rich, deep and interesting topic, please subscribe to our podcast on whichever platform you may stream from.

And even consider, if you like what you've heard today, reviewing us on iTunes and/or mentioning us on your preferred social media platforms. We're just trying to get the word out on this fascinating topic and would really appreciate your help spreading the word and building community. Thank you so much for your time.

Creators and Guests